CBAM (placeholder post, more to be filled in later)

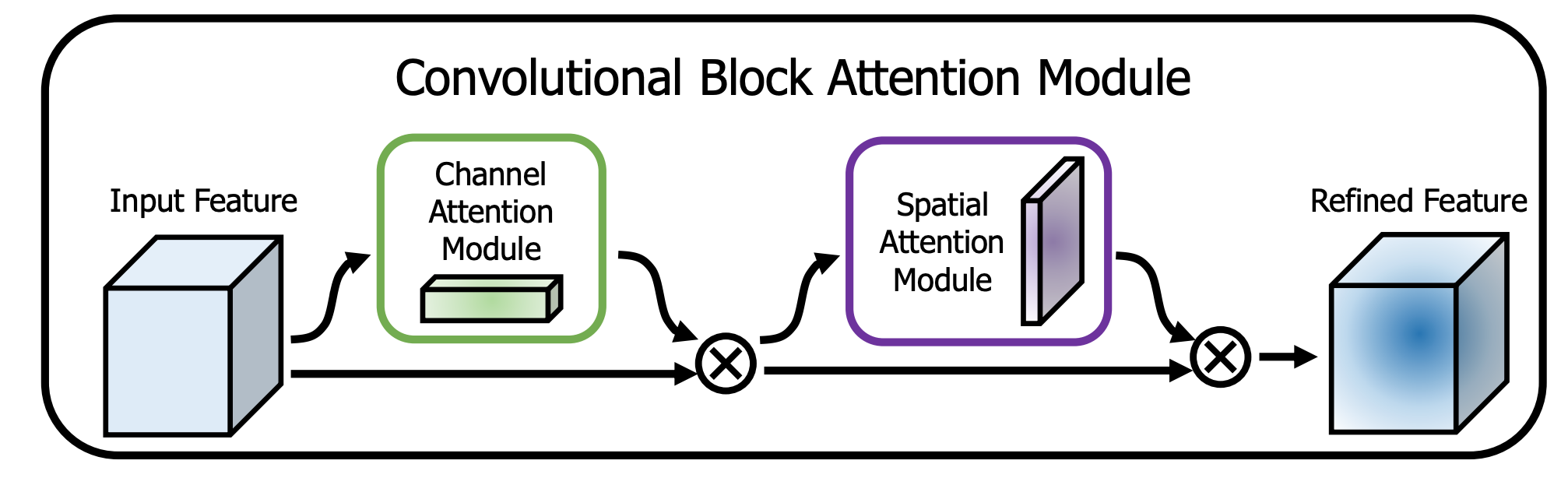

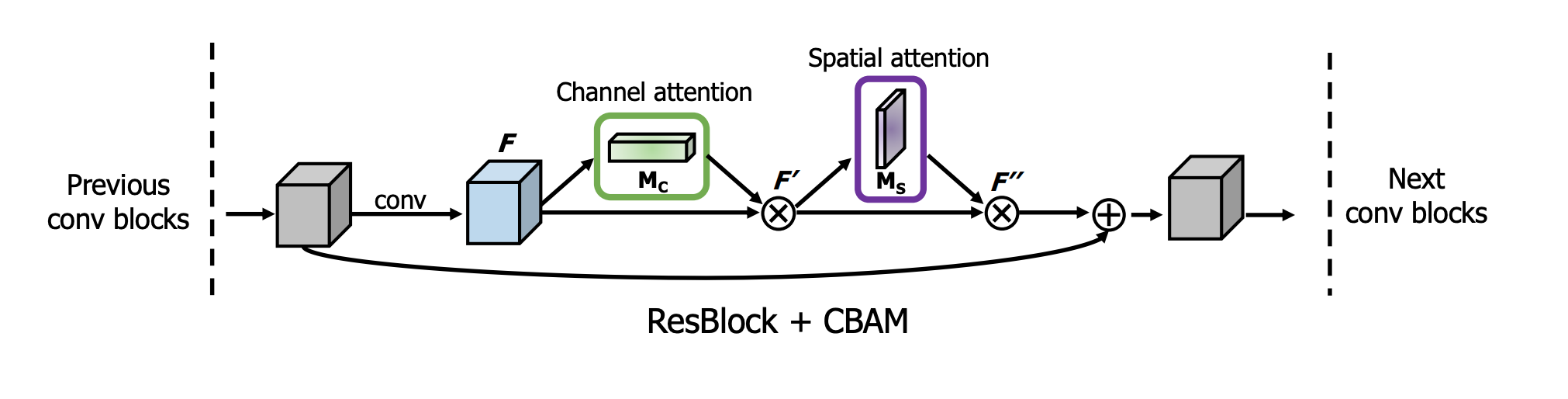
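For reference, these are the two attention maps the figures depict, written as in the original CBAM paper: σ is the sigmoid, ⊗ is element-wise multiplication with broadcasting, and the MLP is shared between the average-pooled and max-pooled descriptors. In M_c the pooling runs over the spatial axes; in M_s it runs over the channel axis. In the code, the 1x1 convolutions `CA1` and `CA2` play the roles of W_0 and W_1 (with added biases), and the 7x7 convolution is f^{7×7}.

$$
M_c(F) = \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big), \qquad \mathrm{MLP}(z) = W_1\,\mathrm{ReLU}(W_0 z)
$$

$$
M_s(F') = \sigma\big(f^{7\times 7}([\mathrm{AvgPool}(F');\ \mathrm{MaxPool}(F')])\big)
$$

$$
F' = M_c(F) \otimes F, \qquad F'' = M_s(F') \otimes F', \qquad W_0 \in \mathbb{R}^{C/r \times C},\ W_1 \in \mathbb{R}^{C \times C/r}
$$

So the channel branch costs 2C²/r weights (plus C/r + C biases in the code), and the spatial branch only 7 · 7 · 2 = 98 weights plus 1 bias.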

xdef CBAM(input, reduction): """ @Convolutional Block Attention Module """

_, width, height, channel = input.get_shape() # (B, W, H, C)

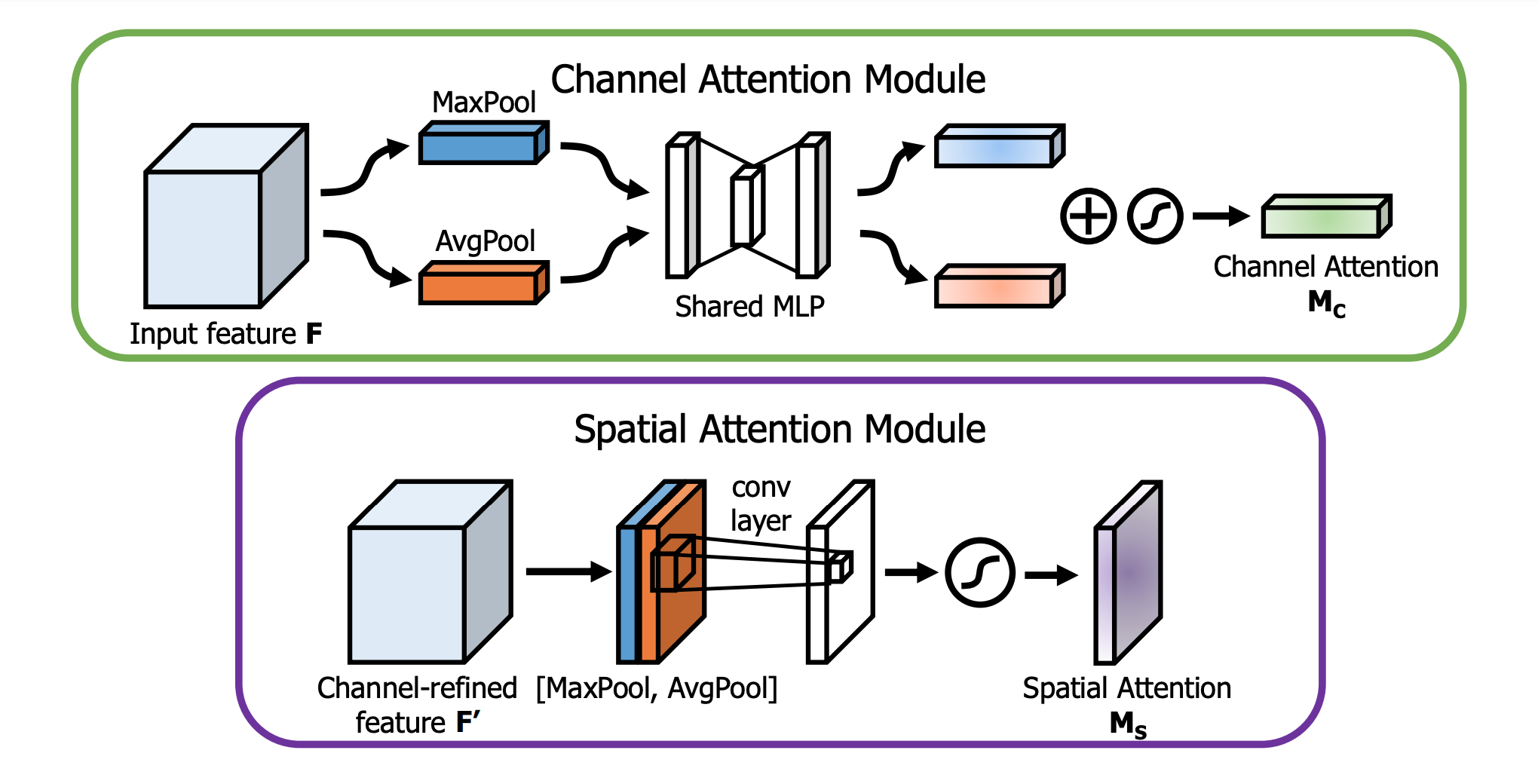

# channel attention x_mean = tf.reduce_mean(input, axis=(1, 2), keepdims=True) # (B, 1, 1, C) x_mean = tf.layers.conv2d(x_mean, channel // reduction, 1, activation=tf.nn.relu, name='CA1') # (B, 1, 1, C // r) x_mean = tf.layers.conv2d(x_mean, channel, 1, name='CA2') # (B, 1, 1, C)

x_max = tf.reduce_max(input, axis=(1, 2), keepdims=True) # (B, 1, 1, C) x_max = tf.layers.conv2d(x_max, channel // reduction, 1, activation=tf.nn.relu, name='CA1', reuse=True) # (B, 1, 1, C // r) x_max = tf.layers.conv2d(x_max, channel, 1, name='CA2', reuse=True) # (B, 1, 1, C)

x = tf.add(x_mean, x_max) # (B, 1, 1, C) x = tf.nn.sigmoid(x) # (B, 1, 1, C) x = tf.multiply(input, x) # (B, W, H, C)

# spatial attention y_mean = tf.reduce_mean(x, axis=3, keepdims=True) # (B, W, H, 1) y_max = tf.reduce_max(x, axis=3, keepdims=True) # (B, W, H, 1) y = tf.concat([y_mean, y_max], axis=-1) # (B, W, H, 2) y = tf.layers.conv2d(y, 1, 7, padding='same', activation=tf.nn.sigmoid) # (B, W, H, 1) y = tf.multiply(x, y) # (B, W, H, C)

return y
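To check the shapes and the math without a TensorFlow 1.x session, here is a minimal NumPy sketch of the same forward pass. It is an illustration under assumptions, not the author's code: the names `channel_attention`, `spatial_attention`, `cbam` and the `params` layout are hypothetical, the weights are random rather than trained, the 1x1 convolutions `CA1`/`CA2` are written as matrix products (equivalent on a (B, 1, 1, C) tensor), and the 7x7 convolution is a naive loop with 'same' zero padding.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(f, w0, b0, w1, b1):
    """Hypothetical helper. f: (B, H, W, C); w0: (C, C//r); w1: (C//r, C)."""
    def mlp(z):  # shared MLP, matches the 1x1 convs CA1 (ReLU) and CA2 (linear)
        return np.maximum(z @ w0 + b0, 0.0) @ w1 + b1
    avg = f.mean(axis=(1, 2), keepdims=True)  # (B, 1, 1, C)
    mx = f.max(axis=(1, 2), keepdims=True)    # (B, 1, 1, C)
    return f * sigmoid(mlp(avg) + mlp(mx))    # broadcast over H and W


def spatial_attention(x, k, b):
    """Hypothetical helper. x: (B, H, W, C); k: (7, 7, 2, 1) kernel; b: scalar bias."""
    desc = np.concatenate([x.mean(axis=3, keepdims=True),
                           x.max(axis=3, keepdims=True)], axis=3)  # (B, H, W, 2)
    kh, kw = k.shape[:2]
    ph, pw = kh // 2, kw // 2  # 'same' padding for an odd kernel, stride 1
    padded = np.pad(desc, ((0, 0), (ph, ph), (pw, pw), (0, 0)))
    B, H, W, _ = x.shape
    logits = np.empty((B, H, W, 1))
    for i in range(H):
        for j in range(W):
            patch = padded[:, i:i + kh, j:j + kw, :]  # (B, kh, kw, 2)
            # cross-correlation, like tf conv2d (no kernel flip)
            logits[:, i, j, 0] = np.tensordot(patch, k[..., 0], axes=([1, 2, 3], [0, 1, 2])) + b
    return x * sigmoid(logits)  # broadcast over C


def cbam(f, params):
    """Channel attention first, then spatial attention, as in the TF code."""
    x = channel_attention(f, *params['ca'])
    return spatial_attention(x, *params['sa'])


rng = np.random.default_rng(0)
B, H, W, C, r = 2, 8, 8, 16, 4
f = rng.standard_normal((B, H, W, C))
params = {
    'ca': (rng.standard_normal((C, C // r)), np.zeros(C // r),
           rng.standard_normal((C // r, C)), np.zeros(C)),
    'sa': (rng.standard_normal((7, 7, 2, 1)), 0.0),
}
out = cbam(f, params)
print(out.shape)  # (2, 8, 8, 16)
```

Because both attention maps pass through a sigmoid, every output element is the input element scaled by a factor in (0, 1); with all-zero weights each map is exactly 0.5, so the block simply returns 0.25 · F, which is a handy sanity check.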