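Experiments with DenseNet 🧪

Paper walkthrough

Link: https://arxiv.org/pdf/1608.06993.pdf

On a whim I recently went back and reread this 2017 oral paper. The line of work runs from GoogLeNet, VGG-19 and Inception to ResNet, which brought skip connections into the mainstream: a shortcut bridges a layer's input to its output, which makes much deeper networks trainable and lets features be extracted at greater depth.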

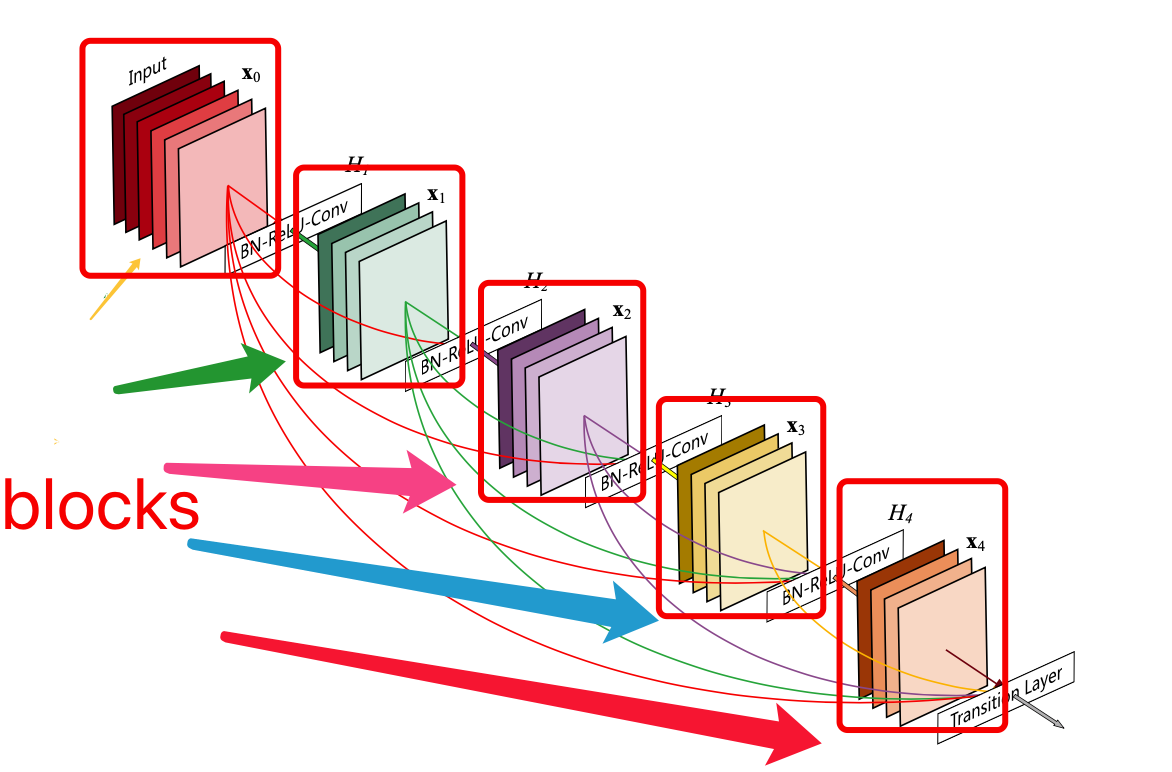

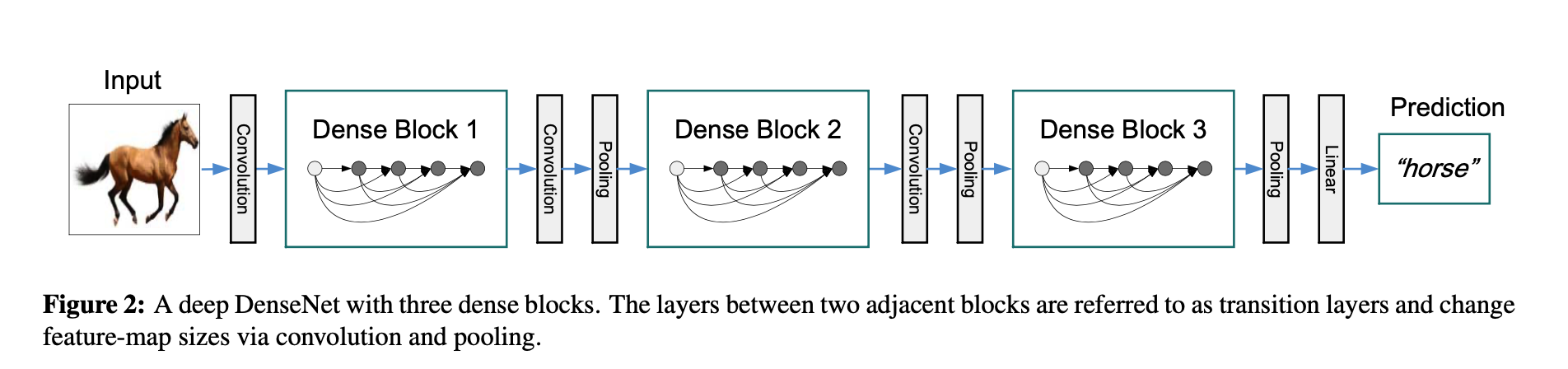

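DenseNet proposes a stronger connectivity pattern: dense connections, which look more complex at first glance. Within each block, every layer is connected to all of the layers before it. The annotated figure from the paper makes this easier to see.

Each multi-layer structure is a block that contains several layers. Every layer is joined to all preceding layers along the channel dimension, which in PyTorch is done with torch.cat(). In an ordinary network, a layer's output is simply whatever comes out of its (non-linear) activation function.

DenseNet's output is different: it stacks the outputs of all preceding layers by concatenating them along the channels dimension.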
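As a small illustration of that channel-wise concatenation, here is a sketch with made-up tensor sizes (they are assumptions for the example, not values from the paper). Two feature maps with the same batch and spatial size are joined along dim=1, so only the channel count changes.

```python
import torch

# Hypothetical sizes chosen for illustration: batch 2, 8x8 feature maps.
x = torch.randn(2, 16, 8, 8)             # features arriving at a layer (16 channels)
new_features = torch.randn(2, 12, 8, 8)  # what the layer produces (12 channels)

# Concatenate along the channel dimension (dim=1 in NCHW layout).
out = torch.cat((x, new_features), dim=1)
print(out.shape)  # torch.Size([2, 28, 8, 8])
```

The first 16 channels of `out` are the untouched input, which is what lets later layers reuse earlier features directly.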

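Network structure:

Forward pass:

Block implementation:

A DenseNet block is made of several sub-structures, each with its own conv and BN steps. I define a Dense_Block function that collects the layers in a list. num_blocks is the number of red boxes in the figure at the top of this post, and each red box stands for one bottleneck layer. growth_rate is the channel growth rate. The number of convolution kernels sets the channel dimension of the output, and channels are the dimension we concatenate along, so keeping the growth rate small matters: it stops the channel count from blowing up. The remaining parameter, input_channels, is the number of channels entering the block. Inside the function it initialises the running channel count, which grows by growth_rate after every bottleneck.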
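The channel bookkeeping described above can be checked by hand. The helper below is a hypothetical name introduced only for this sketch; it lists the channel count seen by each bottleneck and the count leaving the block, using 32 input channels, a growth rate of 12 and 6 bottlenecks as example values.

```python
def dense_block_channels(input_channels, growth_rate, num_blocks):
    """Return the channel count entering each bottleneck, plus the final count."""
    counts = [input_channels]
    for _ in range(num_blocks):
        # Each bottleneck concatenates growth_rate new channels onto its input.
        counts.append(counts[-1] + growth_rate)
    return counts


print(dense_block_channels(32, 12, 6))
# [32, 44, 56, 68, 80, 92, 104]
```

The block therefore ends with input_channels + num_blocks * growth_rate channels, here 32 + 6 * 12 = 104.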

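```python
import torch
import torch.nn as nn


def Dense_Block(num_blocks, growth_rate, input_channels):
    """Stack num_blocks bottleneck layers; each one adds growth_rate channels."""
    layers = []
    now_channel = input_channels
    for i in range(num_blocks):
        # Bottleneck is defined in the next section.
        layers.append(Bottleneck(input_channels=now_channel, growth_rate=growth_rate))
        now_channel += growth_rate
    return nn.Sequential(*layers)
```

Bottleneck implementation: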

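First, what the parameters mean:

Growth rate: the number of feature maps each layer outputs

Input channels: the number of channels in the incoming feature map

Output channels of the 1x1 convolution: 4 times the growth rate, as given in the paper

Output channels of the second convolution: equal to the growth rate

After storing the growth rate and the other parameters, I define the structure of a single layer, which contains two convolutions and two BN layers. For the Conv2d arguments, the paper fixes the output of the first convolution at four times the growth rate, whatever its input channel count is. That value is simply the number of kernels in the layer, all of which take part in the backward pass. This circular-looking relationship had me stuck for quite a while. Finally, in the forward pass every layer is joined to the channels that came before it, via torch.cat().

We find this design especially effective for DenseNet and we refer to our network with such a bottleneck layer, i.e., to the BN-ReLU-Conv(1×1)-BN-ReLU-Conv(3×3) version of H_l, as DenseNet-B. In our experiments, we let each 1×1 convolution produce 4k feature-maps.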
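To get a feel for what the 4k bottleneck buys, the sketch below counts convolution weights only (bias=False, BN parameters ignored) for a bottleneck layer and for a single 3x3 convolution mapping the same input straight to k channels. Both function names are hypothetical, and the input sizes 32 and 374 are example values for an early and a late layer with k = 12.

```python
def bottleneck_conv_weights(input_channels, growth_rate):
    """Weights in Conv(1x1, C_in -> 4k) followed by Conv(3x3, 4k -> k)."""
    mid = 4 * growth_rate
    conv1x1 = input_channels * mid * 1 * 1
    conv3x3 = mid * growth_rate * 3 * 3
    return conv1x1 + conv3x3


def plain_conv3x3_weights(input_channels, growth_rate):
    """Weights in a single Conv(3x3, C_in -> k) with no bottleneck."""
    return input_channels * growth_rate * 3 * 3


for c_in in (32, 374):
    print(c_in, bottleneck_conv_weights(c_in, 12), plain_conv3x3_weights(c_in, 12))
# 32 6720 3456
# 374 23136 40392
```

With few input channels the bottleneck actually costs more, but once concatenation has pushed the input well past 4k channels the 1x1 reduction pays for itself.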

```python
class Bottleneck(nn.Module):
    def __init__(self, input_channels, growth_rate):
        super().__init__()
        # growth_rate: number of feature maps each layer adds
        # input_channels: channels of the incoming feature map
        # 1x1 conv output: 4 * growth_rate channels, as in the paper
        # 3x3 conv output: growth_rate channels
        self.growth_rate = growth_rate
        self.input_channels = input_channels
        self.output_1x1_channels = 4 * self.growth_rate
        self.layers = nn.Sequential(
            nn.BatchNorm2d(self.input_channels),
            nn.ReLU(),
            nn.Conv2d(self.input_channels, self.output_1x1_channels, 1, padding=0, bias=False),
            nn.BatchNorm2d(self.output_1x1_channels),
            nn.ReLU(),
            nn.Conv2d(self.output_1x1_channels, self.growth_rate, kernel_size=3, stride=1, padding=1, bias=False),
        )

    def forward(self, x):
        out = self.layers(x)
        # Concatenate the input with the new feature maps along the channel axis.
        out = torch.cat((x, out), dim=1)
        return out
```

Transition Layer:

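As the structure diagram above shows, two adjacent blocks are not wired together by feeding one block's output straight into the next. Instead the features pass through a non-linear stage that further adjusts the size of the feature maps. The paper calls this part the transition layer. It is implemented as follows:

Parameters:

input_channels: number of input channels

output_channels: number of output channels

Layer definition:

- BN layer (batch normalization)

- Activation layer (ReLU)

- Convolution layer (1x1 kernel; changes the number of channels and so reduces dimensionality)

- Pooling: shrinks the feature map (by half per side here), which reduces the computation in later layers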
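The effect of these four steps on tensor shape can be sketched in a few lines. The function name is hypothetical, and it takes a compression factor for convenience even though the TransitionLayer class itself receives output_channels directly; the 56x56 feature-map size is an example value.

```python
def transition_output_shape(channels, height, width, compression=0.5):
    """Shape after a 1x1 conv (channel compression) and 2x2 average pooling."""
    out_channels = int(compression * channels)
    # AvgPool2d(kernel_size=2) uses stride 2 by default and floors odd sizes.
    return out_channels, height // 2, width // 2


print(transition_output_shape(104, 56, 56))  # (52, 28, 28)
```

BN and ReLU leave the shape unchanged, so only the convolution and the pooling appear in the calculation.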

```python
class TransitionLayer(nn.Module):
    def __init__(self, input_channels, output_channels):
        super().__init__()
        self.input_channel = input_channels
        self.output_channel = output_channels
        self.layers = nn.Sequential(
            nn.BatchNorm2d(input_channels),
            nn.ReLU(),
            nn.Conv2d(in_channels=input_channels, out_channels=output_channels, kernel_size=1, stride=1, padding=0, bias=False),
            nn.AvgPool2d(kernel_size=2),
        )

    def forward(self, _input):
        out_put = self.layers(_input)
        return out_put
```

DenseNet class:

DenseNet core code

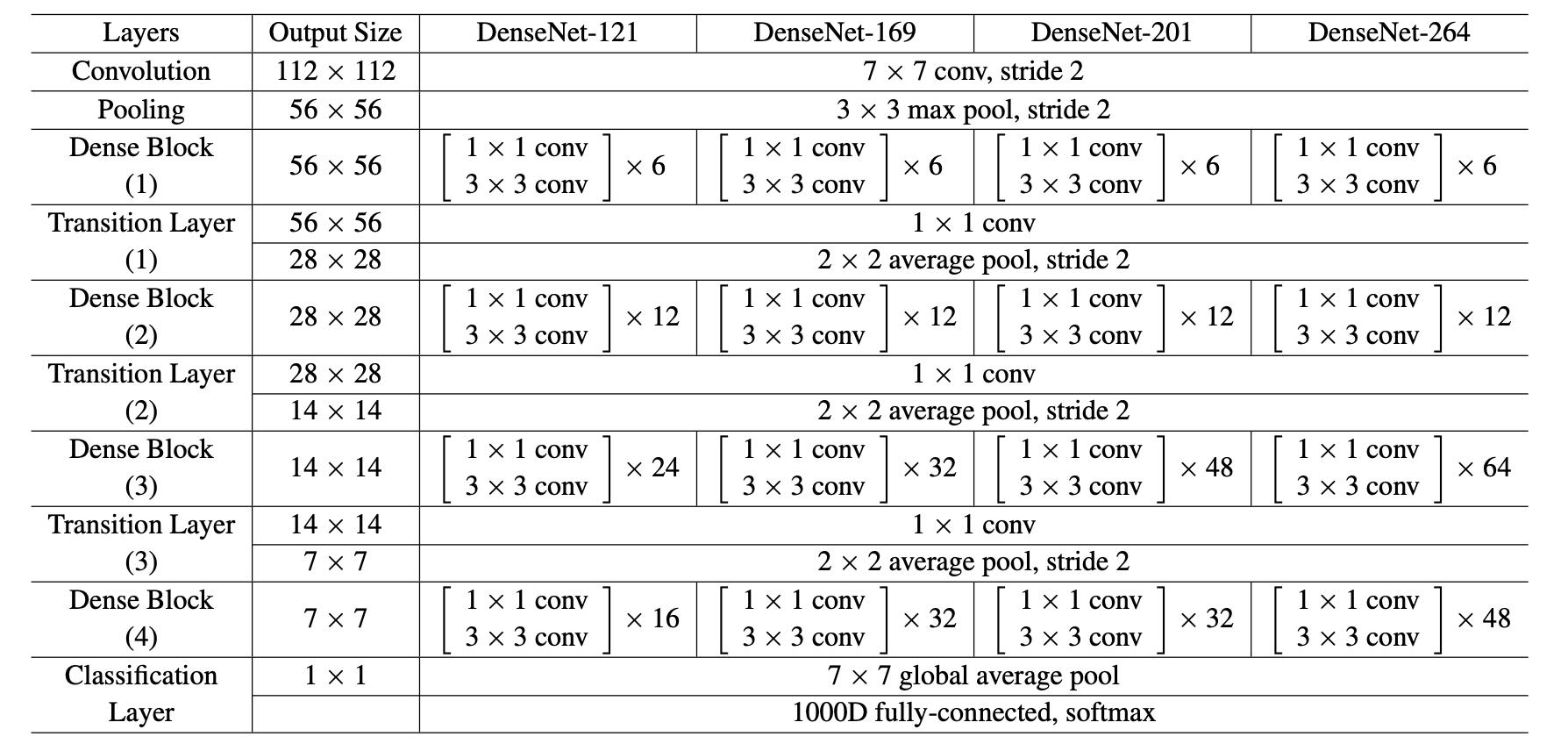
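The DenseNet class in this section calls a Header_conv module that the post never shows. Below is a minimal sketch of what it might look like, assuming it mirrors the ImageNet stem described in the paper (a 7x7 convolution with stride 2 followed by 3x3 max pooling with stride 2). The layer choices are an assumption, not the author's code.

```python
import torch
import torch.nn as nn


class Header_conv(nn.Module):
    """Hypothetical stem: 7x7 conv (stride 2) + BN + ReLU + 3x3 max pool (stride 2)."""

    def __init__(self, input_channels, output_channels):
        super().__init__()
        self.layers = nn.Sequential(
            nn.Conv2d(input_channels, output_channels, kernel_size=7, stride=2, padding=3, bias=False),
            nn.BatchNorm2d(output_channels),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1),
        )

    def forward(self, x):
        return self.layers(x)


stem = Header_conv(input_channels=3, output_channels=32)
out = stem(torch.randn(1, 3, 224, 224))
print(out.shape)  # torch.Size([1, 32, 56, 56])
```

With this stem a 224x224 input is halved three more times by the transition layers (56, 28, 14, 7) and reaches the final dense block at 7x7, which matches AvgPool2d(kernel_size=7) in the classifier. A 64x64 input would arrive there at 2x2, so the 64x64 run reported later presumably used a different stem or pooling size.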

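- growth_rate: the growth rate

- channels_in: number of channels in the input data

- num_dense_block: how many dense blocks to build; not used for now

- num_bottleneck: a list giving the number of bottlenecks in each dense block, e.g. [6, 12, 24, 16] for DenseNet-121

- num_channels_before_dense: output channels of the first convolution layer

- compression: compression factor; a transition layer outputs compression times its input channel count

- num_classes: number of classes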
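These parameters fully determine the channel count at every stage. The sketch below mirrors the arithmetic in the DenseNet constructor using a hypothetical helper name, and runs it on the configuration the post instantiates (32 stem channels, growth rate 12, [6, 12, 24, 16] bottlenecks, compression 0.5).

```python
def densenet_channel_trace(num_channels_before_dense, growth_rate, num_bottleneck, compression):
    """Return (stage name, channel count) pairs for each dense block and transition."""
    trace = []
    channels = num_channels_before_dense
    for i, n in enumerate(num_bottleneck):
        # A dense block adds growth_rate channels per bottleneck.
        channels = int(channels + n * growth_rate)
        trace.append(("dense_%d" % (i + 1), channels))
        if i < len(num_bottleneck) - 1:  # no transition after the last block
            channels = int(compression * channels)
            trace.append(("transition_%d" % (i + 1), channels))
    return trace


for name, channels in densenet_channel_trace(32, 12, [6, 12, 24, 16], 0.5):
    print(name, channels)
# dense_1 104
# transition_1 52
# dense_2 196
# transition_2 98
# dense_3 386
# transition_3 193
# dense_4 385
```

The last value, 385, is the in_features of the final linear layer for this configuration.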

```python
class DenseNet(nn.Module):
    def __init__(self, growth_rate, channels_in, num_dense_block, num_bottleneck, num_channels_before_dense, compression, num_classes):
        super().__init__()
        self.growth_rate = growth_rate
        self.channel_in = channels_in
        self.num_dense_block = num_dense_block
        self.num_bottleneck = num_bottleneck

        # 1: first convolution layer (Header_conv is not defined in this post)
        self.first_conv = Header_conv(input_channels=channels_in, output_channels=num_channels_before_dense)

        # 2: first dense block
        self.dense_1 = Dense_Block(num_blocks=num_bottleneck[0], input_channels=num_channels_before_dense,
                                   growth_rate=growth_rate)
        dense_1_out_channels = int(num_channels_before_dense + num_bottleneck[0] * growth_rate)
        self.transition_1 = TransitionLayer(input_channels=dense_1_out_channels,
                                            output_channels=int(compression * dense_1_out_channels))

        # 3: second dense block
        self.dense_2 = Dense_Block(num_blocks=num_bottleneck[1], input_channels=int(compression * dense_1_out_channels),
                                   growth_rate=growth_rate)
        dense_2_out_channels = int(compression * dense_1_out_channels + num_bottleneck[1] * growth_rate)
        self.transition_2 = TransitionLayer(input_channels=dense_2_out_channels,
                                            output_channels=int(compression * dense_2_out_channels))

        # 4: third dense block
        self.dense_3 = Dense_Block(num_blocks=num_bottleneck[2], input_channels=int(compression * dense_2_out_channels),
                                   growth_rate=growth_rate)
        dense_3_out_channels = int(compression * dense_2_out_channels + num_bottleneck[2] * growth_rate)
        self.transition_3 = TransitionLayer(input_channels=dense_3_out_channels,
                                            output_channels=int(compression * dense_3_out_channels))

        # 5: fourth dense block
        self.dense_4 = Dense_Block(num_blocks=num_bottleneck[3],
                                   input_channels=int(compression * dense_3_out_channels),
                                   growth_rate=growth_rate)
        dense_4_out_channels = int(compression * dense_3_out_channels + num_bottleneck[3] * growth_rate)

        # 6: final 7x7 pooling layer and the fully connected classifier
        self.BN_before_classify = nn.BatchNorm2d(num_features=dense_4_out_channels)
        self.pool_before_classify = nn.AvgPool2d(kernel_size=7)
        self.classify = nn.Linear(in_features=dense_4_out_channels, out_features=num_classes)

    def forward(self, x):
        out_1 = self.first_conv(x)
        # print(out_1.shape)
        out_2 = self.transition_1(self.dense_1(out_1))
        # print(out_2.shape)
        out_3 = self.transition_2(self.dense_2(out_2))
        # print(out_3.shape)
        out_4 = self.transition_3(self.dense_3(out_3))
        # print(out_4.shape)
        out_5 = self.dense_4(out_4)
        # print(out_5.shape)
        out_6 = self.BN_before_classify(out_5)
        # print(out_6.shape)
        out_7 = self.pool_before_classify(out_6)
        # print(out_7.shape)
        out_8 = self.classify(out_7.view(x.size(0), -1))
        return out_8


# x = torch.randn(size=(4, 3, 224, 224))
densenet = DenseNet(channels_in=3, compression=0.5, growth_rate=12, num_classes=10, num_bottleneck=[6, 12, 24, 16],
                    num_channels_before_dense=32,
                    num_dense_block=4)
```

Experimental results 🧪:

Optimizer and loss configuration:

```python
import torch.optim as optim

criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(densenet.parameters(), lr=0.0001, momentum=0.9)
```

```
epoch: 1
[Epoch 1, Batch 100] loss: 3.697
[Epoch 1, Batch 200] loss: 3.665
[Epoch 1, Batch 300] loss: 3.646

epoch: 2
[Epoch 2, Batch 100] loss: 3.626
[Epoch 2, Batch 200] loss: 3.614
[Epoch 2, Batch 300] loss: 3.604

epoch: 3
[Epoch 3, Batch 100] loss: 3.591
[Epoch 3, Batch 200] loss: 3.581
[Epoch 3, Batch 300] loss: 3.572

epoch: 4
[Epoch 4, Batch 100] loss: 3.557
[Epoch 4, Batch 200] loss: 3.543
```
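A quick sanity check on these numbers, assuming the logged value is the mean cross-entropy per sample (the post does not show the training loop, so that is an assumption): a classifier that spreads probability evenly over C classes has a loss of ln(C). The helper name below is hypothetical.

```python
import math


def uniform_cross_entropy(num_classes):
    """Cross-entropy of a classifier that gives every class equal probability."""
    return math.log(num_classes)


print(round(uniform_cross_entropy(10), 3))  # 2.303
# Number of equally likely classes that would give the first logged loss:
print(round(math.exp(3.697), 1))  # 40.3
```

Under that assumption the first logged loss corresponds to roughly 40 equally likely classes rather than 10, so the logged run may have used a different num_classes, or averaged the loss differently, from the instantiation shown above.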

The remaining experiments are still running 🏃

GPU memory usage on a single card for different batch sizes:

Batch size: 512

Input size: (64, 64, 3)

```
+-------------------------------+----------------------+----------------------+
|   6  GeForce GTX 108...  On   | 00000000:8A:00.0 Off |
| 31%   53C    P2    75W / 250W |   4065MiB / 11178MiB |
+-------------------------------+----------------------+----------------------+
```

Batch size: 64

Input size: (224, 224, 3)

```
+-------------------------------+----------------------+----------------------+
|   5  GeForce GTX 108...  On   | 00000000:89:00.0 Off |                  N/A |
| 32%   53C    P2    75W / 250W |  10937MiB / 11178MiB |
+-------------------------------+----------------------+----------------------+
```