Monodepth/SFMLearner自监督深度估计复现

author:张一极

date:2024年01月06日22:34:06

相关paper:Unsupervised Learning of Depth and Ego-Motion from Video

1.基本原理:

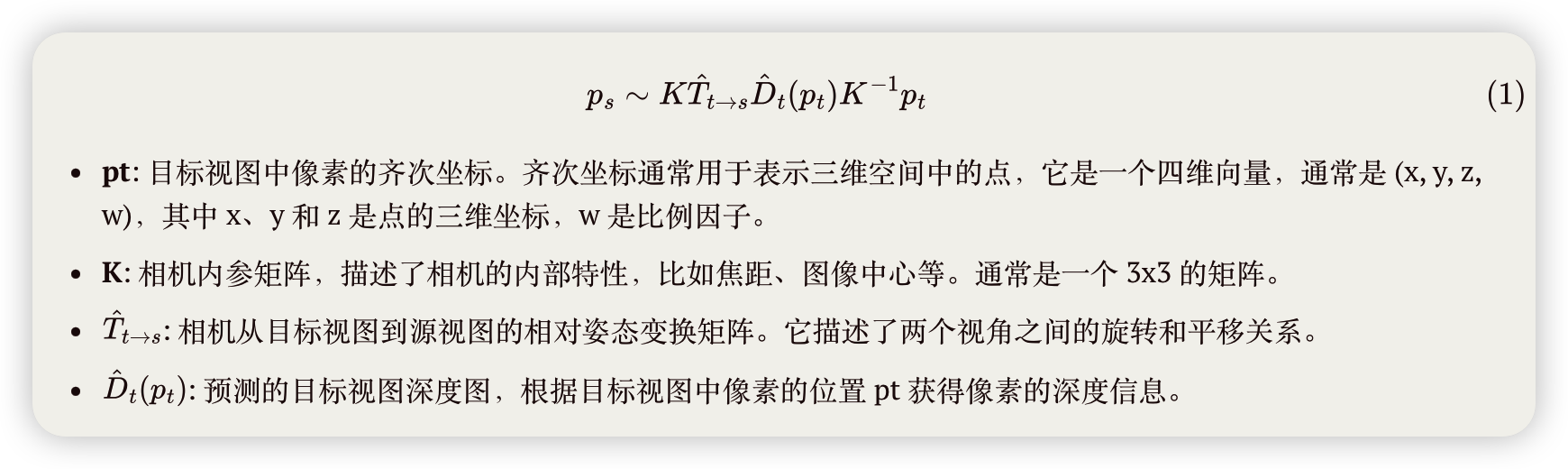

关于自监督进行单目深度估计的思路,首先数据集采用视频流的多帧形式,监督信号来自于上下帧的重建差异,核心重建算法即:

pt: 目标视图中像素的齐次坐标。齐次坐标通常用于表示三维空间中的点,它是一个四维向量,通常是 (x, y, z, w),其中 x、y 和 z 是点的三维坐标,w 是比例因子。

K: 相机内参矩阵,描述了相机的内部特性,比如焦距、图像中心等。通常是一个 3x3 的矩阵。

公式的含义是,通过相机内参矩阵 K、目标视图到源视图的相对姿态变换矩阵

接着进行图像比较,

I_t(p): 目标视图中像素 p 处的真实像素值。

s: 源视图的索引,表示训练图像序列中的不同视角。损失函数对于训练集中的所有源视图进行求和。

p: 像素索引,表示图像中的特定像素位置。

针对整个图像,计算一个重建损失,利用可微分的重建损失,得到最后的监督信号,所以他可以实现持续学习,在不断读入下一帧的同时针对上一帧进行重建,同时计算重建损失后,进行参数调整。

2.复现代码:

总体训练流程:

a.定义数据集

ximg_directory = 'xxx'label_directory = 'xxx'data_transform = transforms.Compose([ transforms.ToTensor(),])dataset = SFMLDataset(img_directory, label_directory, transform=data_transform)

b.定义模型和损失函数:

xxxxxxxxxxpose_model = PoseExpNet(num_source=1, do_exp=True).to(device)pos_weight = torch.tensor(100.0) disp_model = DispNet(in_channels=3, out_channels=1).to(device)parameters = list(pose_model.parameters()) + list(disp_model.parameters())criterion = PositiveWeightedMSELoss(pos_weight)

c.定义训练流程:

xxxxxxxxxxoptimizer = optim.SGD(pose_model.parameters(), lr=0.001,momentum=0.9)optimizer2 = optim.SGD(disp_model.parameters(), lr=0.001,momentum=0.9)num_epochs = 400count = 0for epoch in range(num_epochs): running_loss = 0.0 # Iterate through the dataset for i, data in enumerate(dataset): labels,inputs = data count+=1 # Zero the parameter gradients optimizer.zero_grad() # Forward + backward + optimize inputs = inputs.to(device).unsqueeze(0) pose_final, masks = pose_model(inputs,labels.to(device).unsqueeze(0)) depth_map,conv6output = disp_model(labels.to(device).unsqueeze(0)) print("pose:",pose_final) print("conv6output:",conv6output.shape) intrinsics = generate_camera_intrinsics() result = projective_inverse_warp(inputs.permute(0, 2, 3, 1), depth_map[0], pose_final, intrinsics.to(device),device) loss = rgb_mse_loss(result[0].permute(2, 0, 1), labels.to(device)) loss.backward() before_update_params = {name: param.clone().detach() for name, param in pose_model.named_parameters()} optimizer.step() optimizer2.step() after_update_params = {name: param.clone().detach() for name, param in pose_model.named_parameters()} running_loss += loss.item() if i % 10 == 9: # Print every 10 mini-batches print(f'Epoch [{epoch + 1}/{num_epochs}], ' f'Batch [{i + 1}/{len(dataset)}], ' f'Loss: {running_loss / 10:.4f}') running_loss = 0.0 # torch.save(net.state_dict(), f'./exp/runtime3/{epoch}.pth')

print('Finished Training')3.模型定义部分和变换解析:

深度预测模型(dispnet):

输入为[3,h,w],输出为[1,h,w]:

xxxxxxxxxximport torchimport torch.nn as nnimport torch.nn.functional as F

class DoubleConv(nn.Module): def __init__(self, in_channels, out_channels): super(DoubleConv, self).__init__() self.conv = nn.Sequential( nn.Conv2d(in_channels, out_channels, 3, 1, 1), nn.BatchNorm2d(out_channels), nn.ReLU(inplace=True), nn.Conv2d(out_channels, out_channels, 3, 1, 1), nn.BatchNorm2d(out_channels), nn.ReLU(inplace=True) ) def forward(self, x): return self.conv(x)

class DispNet(nn.Module): def __init__(self, in_channels, out_channels): super(DispNet, self).__init__() self.conv1 = DoubleConv(in_channels, 64) self.pool1 = nn.MaxPool2d(2) self.conv2 = DoubleConv(64, 128) self.pool2 = nn.MaxPool2d(2) self.conv3 = DoubleConv(128, 256) self.pool3 = nn.MaxPool2d(2) self.conv4 = DoubleConv(256, 512) self.pool4 = nn.MaxPool2d(2) self.conv5 = DoubleConv(512, 1024) self.up6 = nn.ConvTranspose2d(1024, 512, 2, 2) self.conv6 = DoubleConv(1024, 512) self.up7 = nn.ConvTranspose2d(512, 256, 2, 2) self.conv7 = DoubleConv(512, 256) self.up8 = nn.ConvTranspose2d(256, 128, 2, 2) self.conv8 = DoubleConv(256, 128) self.up9 = nn.ConvTranspose2d(128, 64, 2, 2) self.conv9 = DoubleConv(128, 64) self.conv10 = nn.Conv2d(64, out_channels, 1) self.conv6_output = nn.Conv2d(512,1,kernel_size = 1) def forward(self, x): conv1 = self.conv1(x) pool1 = self.pool1(conv1) conv2 = self.conv2(pool1) pool2 = self.pool2(conv2) conv3 = self.conv3(pool2) pool3 = self.pool3(conv3) conv4 = self.conv4(pool3) pool4 = self.pool4(conv4) conv5 = self.conv5(pool4) up6 = self.up6(conv5) merge6 = torch.cat([up6, conv4], dim=1) conv6 = self.conv6(merge6) conv6_output = self.conv6_output(conv6) up7 = self.up7(conv6) merge7 = torch.cat([up7, conv3], dim=1) conv7 = self.conv7(merge7) up8 = self.up8(conv7) merge8 = torch.cat([up8, conv2], dim=1) conv8 = self.conv8(merge8) up9 = self.up9(conv8) merge9 = torch.cat([up9, conv1], dim=1) conv9 = self.conv9(merge9) conv10 = self.conv10(conv9) # output = F.sigmoid(conv10) return 1/(10*conv10+0.1),conv10if __name__ == "__main__": # 使用示例 # 假设输入通道数为3(RGB图像),输出通道数为1(单通道预测结果) model = DispNet(in_channels=3, out_channels=1) input_tensor = torch.randn(1, 3, 640, 640) # 输入尺寸为1x3x256x256 output_tensor = model(input_tensor) print(output_tensor.shape) # 打印输出张量的形状,深度信息为[1,h,w]

位姿预测网络:

输入:

tgt_image: 目标图像,维度应为 [批量大小, 3, 高度, 宽度]。src_image_stack: 源图像的堆栈,维度应为 [批量大小, 3*num_source, 高度, 宽度],其中 num_source 是源图像的数量。

在前向传播中,目标图像和源图像堆栈沿通道维度拼接,形成输入张量 inputs,其维度为 [批量大小, 6, 高度, 宽度]。

输出:

pose_final: 预测的相机姿态,维度为 [批量大小, 6]。前三个值对应平移向量,后三个值对应旋转向量。[mask4, ...]: (可选)一个列表,包含解释性掩码的预测结果。如果do_exp=True,则返回掩码列表,否则为 None。每个掩码的维度为 [批量大小, num_source*2, 高度//16, 宽度//16]。

xxxxxxxxxximport torchimport torch.nn as nn

class PoseExpNet(nn.Module): def __init__(self, num_source, do_exp=True): super(PoseExpNet, self).__init__() self.do_exp = do_exp # Define layers for pose prediction self.conv1 = nn.Conv2d(6, 16, kernel_size=7, stride=2) self.conv2 = nn.Conv2d(16, 32, kernel_size=5, stride=2) self.conv3 = nn.Conv2d(32, 64, kernel_size=3, stride=2) self.conv4 = nn.Conv2d(64, 128, kernel_size=3, stride=2) self.conv5 = nn.Conv2d(128, 256, kernel_size=3, stride=2) self.conv6_pose = nn.Conv2d(256, 256, kernel_size=3, stride=2) self.conv7_pose = nn.Conv2d(256, 256, kernel_size=3, stride=2) self.pose_pred = nn.Conv2d(256, 6, kernel_size=1)

if self.do_exp: self.upconv5 = nn.ConvTranspose2d(256, 256, kernel_size=3, stride=2) self.upconv4 = nn.ConvTranspose2d(256, 128, kernel_size=3, stride=2) self.mask4 = nn.Conv2d(128, num_source * 2, kernel_size=3, stride=1)

def forward(self, tgt_image, src_image_stack): inputs = torch.cat([tgt_image, src_image_stack], dim=1) # Pose specific layers x = nn.ReLU()(self.conv1(inputs)) x = nn.ReLU()(self.conv2(x)) x = nn.ReLU()(self.conv3(x)) x = nn.ReLU()(self.conv4(x)) x = nn.ReLU()(self.conv5(x)) pose_x = nn.ReLU()(self.conv6_pose(x)) pose_x = nn.ReLU()(self.conv7_pose(pose_x)) pose_pred = self.pose_pred(pose_x) pose_avg = torch.mean(pose_pred, dim=[2, 3]) # pose_final = 0.01 * pose_avg.view(-1, 6)

pose_final = 0.1*pose_avg.view(-1, 6) # Exp mask specific layers if self.do_exp: upconv5 = nn.ReLU()(self.upconv5(x)) upconv4 = nn.ReLU()(self.upconv4(upconv5)) mask4 = self.mask4(upconv4) # Define computations for other mask layers similarly return pose_final, [mask4, ...] # Return mask outputs return pose_final, None # If do_exp is False, return None for masks通过深度网络和姿态网络的输出,得到输入像素映射算法的参数,即:

这里的pt和